Jerry Yoakum's thoughts on software engineering and architecture from experience working with code, computer science, python, java, APIs, NASA, data mining, math, etc.

Wednesday, November 23, 2016

Maintain Conceptual Integrity

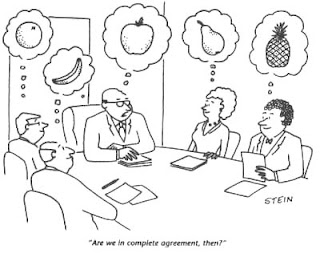

Conceptual integrity is an attribute of a quality design. It implies that a limited number of design "forms" are used and that they are used uniformly. Design forms include the way components inform their callers of error conditions, how the software informs users of error conditions, how data structures are organized, mechanisms for component communication, documentation standards, and so on.

When a design is complete, it should look as if one person created it all, even though it is the product of many devoted people. During the design process, there are often temptations to diverge from accepted forms. It is okay to give in to such temptations if the divergence adds integrity, elegance, simplicity, or performance to the system. It is not okay to give in solely to ensure that designer X leaves a personal mark on the design. Ego satisfaction is not as important as conceptual integrity.
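To make "the way components inform their callers of error conditions" concrete, here is a minimal Python sketch of one uniform error form. The names (`AppError`, `NotFoundError`, `InvalidInputError`, `find_user`, `parse_age`, `to_response`) are hypothetical. The point is only that every component reports errors through the same base type, and every error reaches users in the same shape.

```python
class AppError(Exception):
    """The one error form shared by every component (hypothetical name)."""

    def __init__(self, code: str, message: str) -> None:
        super().__init__(message)
        self.code = code
        self.message = message


class NotFoundError(AppError):
    def __init__(self, what: str) -> None:
        super().__init__("NOT_FOUND", f"{what} not found")


class InvalidInputError(AppError):
    def __init__(self, field: str, reason: str) -> None:
        super().__init__("INVALID_INPUT", f"{field}: {reason}")


def find_user(users: dict, user_id: int) -> str:
    # Lookup component: reports a missing record using the shared form.
    if user_id not in users:
        raise NotFoundError(f"user {user_id}")
    return users[user_id]


def parse_age(text: str) -> int:
    # Parsing component: reports bad input using the same shared form.
    if not text.isdigit():
        raise InvalidInputError("age", "must be a non-negative integer")
    return int(text)


def to_response(err: AppError) -> dict:
    # Every component's errors are presented to users in one consistent shape.
    return {"error": err.code, "message": err.message}


try:
    parse_age("ten")
except AppError as err:
    print(to_response(err))
```

A caller that handles `AppError` once can deal with failures from any component, which is the uniformity that conceptual integrity asks for.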

Labels: architecture, design, software-engineering

Location: Springfield, MO, USA

Saturday, November 19, 2016

Conceptual Errors Are More Significant Than Syntactic Errors

When creating software, whether writing requirements specifications, design specifications, code, or tests, considerable effort is made to remove syntactic errors. This is laudable. However, the real difficulty in constructing software comes from conceptual errors. Syntactic errors often look like silly mistakes that can be laughed off. In contrast, developers often feel flawed, or incompetent, when a conceptual error is located. No matter how good you are, you will make conceptual errors. Look for them.

Ask yourself key questions throughout the development process.

- When you read the requirements, ask yourself, "Is this what the customer really wants?"

- While you are designing a solution, "Will this architecture behave appropriately under stress?" or "Does this algorithm really work in all situations?"

- During coding, "Does this code do what I think it does?" or "Does this code correctly implement the algorithm?"

- During testing, "Does the execution of this test convince me of anything?"
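A small illustration of a conceptual error, my own example rather than one from Brooks: the naive leap-year check below has no syntactic problem, yet the algorithm does not work in all situations. The function names are hypothetical.

```python
def is_leap_naive(year: int) -> bool:
    # Syntactically fine, conceptually wrong: ignores the century rules.
    return year % 4 == 0


def is_leap(year: int) -> bool:
    # Gregorian rule: divisible by 4, except centuries, unless divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# A test that only checks 2016 passes for both versions and convinces us of little.
# The edge cases are what expose the conceptual error.
for year in (2016, 1900, 2000):
    print(year, is_leap_naive(year), is_leap(year))
```

Both versions agree on 2016 and 2000, but the naive version wrongly reports 1900 as a leap year.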

Reference:

Brooks, F., "No Silver Bullet: Essence and Accidents of Software Engineering," IEEE Computer, April 1987.


Friday, November 18, 2016

Use Coupling and Cohesion

Coupling and cohesion were defined in the 1970s by Larry Constantine and Edward Yourdon. They are the best ways we know of measuring the inherent maintainability and adaptability of a software system. In short, coupling is a measure of how interrelated two software components are. Cohesion is a measure of how related the functions performed by a software component are. We want to strive for low coupling and high cohesion. High coupling implies that, when we change a component, changes to other components are likely. Low cohesion implies difficulty in isolating the causes of errors or places to adapt to meet new requirements. Constantine and Yourdon even provided a simple-to-use way to measure the two concepts. Learn it. Use the measure to guide your design decisions.

You want:

- low coupling

- high cohesion

The image above shows a special forces team, which offers an alternative example of low coupling with high cohesion. The sniper team is another such example.
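Here is a minimal Python sketch contrasting two levels on Constantine and Yourdon's coupling scale as they are usually described: control coupling, where the caller passes a flag that selects what the callee does, and data coupling, where the caller passes only the data the callee needs. The order dictionary and all function names are hypothetical.

```python
# Control coupling (tighter): the caller passes a flag that selects which of
# several unrelated jobs the function performs. The caller must know the
# function's internal branches, and the function itself has low cohesion.
def process_order(order: dict, action: str):
    if action == "total":
        return sum(item["price"] * item["qty"] for item in order["items"])
    elif action == "label":
        return f"Ship to: {order['name']}"
    else:
        raise ValueError(f"unknown action: {action!r}")


# Data coupling (looser): each function receives only the data it needs and
# does one job, so each one is also highly cohesive.
def order_total(items: list) -> float:
    return sum(item["price"] * item["qty"] for item in items)


def shipping_label(name: str) -> str:
    return f"Ship to: {name}"


order = {"name": "Ann", "items": [{"price": 2.5, "qty": 2}, {"price": 1.0, "qty": 3}]}
print(order_total(order["items"]), shipping_label(order["name"]))
```

Changing how labels are built now touches only `shipping_label`, and a bug in totals can be isolated to `order_total`.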


Thursday, November 17, 2016

Design for Change

During software development, we regularly uncover errors, new requirements, or the results of earlier miscommunications. All of these cause the design to change even before it is baselined. This should be expected: change during development is inevitable. Bersoff, Henderson, and Siegel defined the first law of system engineering as follows:

"No matter where you are in the system life cycle, the system will change, and the desire to change it will persist throughout the life cycle."

Furthermore, after the design is baselined and the product delivered, even more new requirements will appear. All this means that you must select architectures, components, and specification techniques that accommodate major and incessant change.

To accommodate change, the design should be:

- Modular - composed of independent parts that can be easily upgraded or replaced with a minimum of impact on other parts.

- Portable - easily altered to accommodate new host machines and operating systems.

- Malleable - flexible enough to accommodate new requirements that had not been anticipated.

- Of minimal intellectual distance.

    - Edsger Dijkstra defined intellectual distance as the distance between the real-world problem and the computerized solution to that problem. Richard Fairley argues that the smaller the intellectual distance, the easier it will be to maintain the software.

- Under intellectual control.

    - A design is under intellectual control if it has been created and documented in a manner that enables its creators and maintainers to fully understand it.

- Such that it exhibits conceptual integrity.

    - Conceptual integrity is an attribute of a quality design. It implies that a limited number of design "forms" are used and that they are used uniformly. When a design is complete, it should look as if one person created it all, even though it is the product of many devoted people.
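A minimal Python sketch of the "modular" property, assuming a hypothetical order-storage component: the business logic depends only on a small `OrderStore` interface (a `typing.Protocol`), so one storage implementation can be upgraded or replaced by another without touching the caller. `OrderStore`, `InMemoryStore`, `JsonFileStore`, and `checkout` are illustrative names, not from any particular system.

```python
import json
from pathlib import Path
from typing import Protocol


class OrderStore(Protocol):
    """The only thing callers depend on (hypothetical interface)."""

    def save(self, order_id: str, total: float) -> None: ...
    def load(self, order_id: str) -> float: ...


class InMemoryStore:
    """Simple implementation, handy for tests."""

    def __init__(self) -> None:
        self._orders = {}

    def save(self, order_id: str, total: float) -> None:
        self._orders[order_id] = total

    def load(self, order_id: str) -> float:
        return self._orders[order_id]


class JsonFileStore:
    """Drop-in replacement that persists orders to a JSON file."""

    def __init__(self, path: Path) -> None:
        self._path = Path(path)

    def _read(self) -> dict:
        if not self._path.exists():
            return {}
        return json.loads(self._path.read_text(encoding="utf-8"))

    def save(self, order_id: str, total: float) -> None:
        orders = self._read()
        orders[order_id] = total
        self._path.write_text(json.dumps(orders), encoding="utf-8")

    def load(self, order_id: str) -> float:
        return self._read()[order_id]


def checkout(store: OrderStore, order_id: str, total: float) -> float:
    # Business logic sees only the interface, so the storage part can be
    # swapped with a minimum of impact on this function.
    store.save(order_id, total)
    return store.load(order_id)
```

Swapping `InMemoryStore()` for `JsonFileStore(...)` in a call to `checkout` requires no change to `checkout` itself.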

* Image taken from http://www.greenville-home-remodeling.com/010-greenville-home-remodel-rare-design-before-and-after-kupersmith-front-elevation/. If you like it, check out the cool remodels they do.


Wednesday, November 16, 2016

Design for Maintenance

The largest post-design cost risk for non-software products is manufacturing. This makes design for manufacturability a major design driver.

The largest post-design cost risk for software products is maintenance. Unfortunately, design for maintainability is not the standard for software. People often ask what software maintenance is, and some joke that you don't have to grease code. Well, you don't have to literally grease code, but figuratively greasing it, so that it stays easy and quick to change, does need to happen.

For example, at my workplace Apache Ant was a primary build tool. It has been replaced with Gradle, and as a consequence development teams complain when they have to work on a service that is built with Ant. The maintenance work here would be to convert the build code from Ant to Gradle so that future work is easy and quick. Of course, whether to make that change depends on whether the service is modified often enough to justify the cost. The architectural question is whether the service was designed so that changing its build code to use a different build tool is easy. We approached this by ensuring that the service's build code was loosely coupled to the service's source code.

A designer has the responsibility to select an optimal software architecture to satisfy the requirements. Obviously, the appropriateness of this architecture will have a profound effect on system performance. However, the selection of this architecture also has a profound effect on the maintainability of the final product. Specifically, architecture selection has a greater effect on maintainability than the choice of algorithms or code.


Sunday, November 06, 2016

Design for Errors

Errors in software are to be expected. Since you expect errors, you should make design decisions that maximize the likelihood that:

- Errors are not introduced.

    - Use code reviews to catch functional errors.

    - Use static analysis to catch coding errors.

- Errors that are introduced are easily detected.

    - Ensure that your code is set up to log unexpected errors.

    - Use tools like AppDynamics to view the ranking of errors over different time frames.

    - Use tools such as Splunk to graph errors by type over time. This is especially critical before and after a deployment, to highlight new errors introduced by the latest release.

- Errors that remain in the software after deployment are either noncritical or are compensated for during execution so that the error does not cause a disaster.

    - This is all about testing that things behave the way you want when they are broken. Here are some examples:

        - Database is down. Don't let your service return anything that makes clients think that their order was saved. Depending on your business, you have to decide whether it is better to return error responses or to refuse requests altogether. Which of those could get you into more trouble? But don't just code for that. Actually kill your database in the test environment and test it. Set up a functional test that does this with every build.

        - Disk is full and you can no longer log. Same thing as above: set up a functional test that points the logs at a very small virtual drive, and run this test with every build.

        - System encoding changed. Seriously, this is a thing. On Macs the default is typically UTF-8, on Windows it is often a legacy code page such as cp1252, and on Linux it depends on the locale settings. I have some Python 3 code that I originally wrote on a Mac, and when I moved it to a Windows machine it choked on the encoding, all because I didn't set an encoding. I was [unknowingly] depending on the system default. Servers are group property. Maybe only your Ops team can touch them, but you probably have more than one Operations Engineer. Or your servers are configured via Chef or something else, and everyone misses that single line where someone decided it would be good to explicitly define the system encoding. Boom! Code that was working fails because of what most would consider a trivial setting.
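The fix for the encoding problem described above is to stop depending on the system default. A minimal Python 3 sketch, assuming UTF-8 is the encoding you want on disk; `save_text` and `load_text` are hypothetical helpers, while `Path.write_text`, `Path.read_text`, and `locale.getpreferredencoding` are standard library calls.

```python
import locale
from pathlib import Path


def save_text(path: Path, text: str) -> None:
    # Name the encoding explicitly so the bytes written do not depend on
    # whatever default the host (or its configuration tooling) happens to use.
    path.write_text(text, encoding="utf-8")


def load_text(path: Path) -> str:
    # Read back with the same explicit encoding rather than the system default.
    return path.read_text(encoding="utf-8")


# The host default that the two functions above deliberately ignore.
print("host default encoding:", locale.getpreferredencoding(False))
```

The same file now round-trips identically on a Mac, a Windows machine, or a Linux server whose locale someone changed.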

Such robustness is not easy to incorporate into a design. Some of the ideas that help include the following:

- Never fall out of a case statement. For example, if there are four possible values for a variable, don't check just for three and assume that the fourth is the only remaining possibility. Instead, assume the impossible; check for the fourth value and trap the error condition early.

- Predict as many "impossible" conditions as you can, and develop strategies for recovery.

- To eliminate conditions that may cause disasters, do fault tree analysis for predictable unsafe conditions.
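A minimal Python sketch of the "never fall out of a case statement" rule, assuming a hypothetical `Light` variable with exactly four possible values. Every value is checked explicitly, and anything else is trapped as an error instead of being treated as the leftover case.

```python
from enum import Enum


class Light(Enum):
    # Hypothetical variable with exactly four possible values.
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"
    FLASHING = "flashing"


def action_for(light: Light) -> str:
    if light is Light.RED:
        return "stop"
    elif light is Light.YELLOW:
        return "slow down"
    elif light is Light.GREEN:
        return "go"
    elif light is Light.FLASHING:
        # Check the fourth value explicitly instead of assuming it...
        return "proceed with caution"
    else:
        # ...and trap the "impossible" case early rather than falling through.
        raise ValueError(f"unexpected light value: {light!r}")
```

If a fifth value is ever added to `Light` without updating `action_for`, the function raises immediately instead of silently returning the wrong action.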

