Software with a lot of pre-release errors will also have a lot of post-release errors. This is bad news for developers, but it is fully supported by empirical data*. The more errors you have already found in a product, the more you will still find. The best advice is to replace any component with a poor history. Don't throw good money after bad.

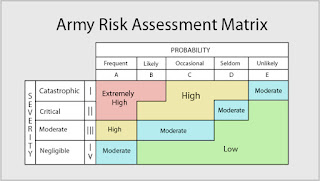

If your organization keeps impeccable records, you could use Bayes' theorem to estimate how many errors are probably still left in a software component by comparing it to a larger population of similar components. More than likely you don't have that kind of data to draw from, so you can use this pessimistic heuristic instead:

However many errors were fixed in the component before release is how many more errors you can expect it to still have.

A more optimistic and, hopefully, more accurate approach would be to apply the above heuristic to a quarter-to-quarter or month-to-month timeline. I'm suggesting basic trend projection forecasting, so you could get much more creative if you wanted to. However, in my experience, businesses don't want to spend the time and effort needed to gather and process data for anything more complex. There are also concerns about employee satisfaction if you go down this rabbit hole, because eventually you'll start tying error rates to certain combinations of people on development teams. The slope gets slippery and the ROI diminishes. K.I.S.S.
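To make "basic trend projection" concrete, here is a minimal sketch that fits a straight line to per-period error counts by ordinary least squares and extends it one period ahead. The function name `project_next_period` and the sample numbers are hypothetical, and the straight-line fit is my assumption about what "basic" means here; it is one simple way to do it, not the only one.

```python
def project_next_period(counts):
    """Fit a least-squares line to per-period error counts and
    extrapolate it one period past the end of the data."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two periods to fit a trend")

    xs = range(n)  # periods are numbered 0, 1, 2, ...
    mean_x = sum(xs) / n
    mean_y = sum(counts) / n

    # Ordinary least-squares slope and intercept.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, counts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Period n is the first one we have no data for.
    forecast = intercept + slope * n

    # A negative error count makes no sense, so clamp at zero.
    return max(0.0, forecast)


# Hypothetical monthly counts of newly found errors.
monthly_errors = [10, 8, 6, 4]
print(project_next_period(monthly_errors))  # 2.0
```

A straight line will happily project below zero once the counts get small, which is why the result is clamped. Treat a forecast of zero as a signal to widen the measurement period, not as proof the bugs are gone.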

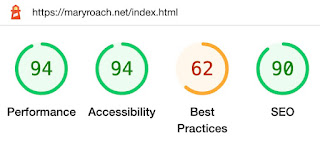

Back to trend projection forecasting: when your software reaches the point where your measurement period has zero errors, don't declare the software free of bugs. Instead, adjust your measurement period. For example, if no errors were found last month, it is time to switch to measuring by quarter, and so on. This is useful when a stakeholder asks how many bugs are left in the software, and they will ask. You can say, "We don't expect to find any new errors next month, but it's probable that we'll find X new errors over the next quarter." If you are really on top of your game, you'll also track the variance of errors from period to period so that you can provide a min-max range (see the graph above).
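Here is a rough sketch of both ideas: rolling months up into quarters once a month comes back clean, and turning period-to-period variation into a min-max range. Everything named here (`to_quarters`, `pick_series`, `forecast_range`, the spread multiplier `k`, and the sample data) is hypothetical. Building the range from the standard deviation of the period-to-period changes is an assumption on my part; use whatever spread measure your stakeholders will accept.

```python
from statistics import mean, stdev


def to_quarters(monthly):
    """Sum months into quarters, dropping the oldest leftover months
    so that the most recent quarter is always complete."""
    start = len(monthly) % 3
    return [sum(monthly[i:i + 3]) for i in range(start, len(monthly), 3)]


def pick_series(monthly):
    """Switch from months to quarters once the latest month has zero errors."""
    if monthly and monthly[-1] == 0:
        return "quarter", to_quarters(monthly)
    return "month", list(monthly)


def forecast_range(counts, k=1.0):
    """Return (low, expected, high) for the next period.

    expected = last count + average period-to-period change
    low/high = expected -/+ k standard deviations of those changes
    """
    if len(counts) < 3:
        raise ValueError("need at least three periods to measure spread")
    changes = [b - a for a, b in zip(counts, counts[1:])]
    expected = max(0.0, float(counts[-1] + mean(changes)))
    spread = k * stdev(changes)
    return max(0.0, expected - spread), expected, expected + spread


# Hypothetical data: twelve months, ending with a clean month.
monthly = [12, 10, 8, 9, 7, 5, 6, 5, 3, 4, 5, 0]
period, series = pick_series(monthly)
low, expected, high = forecast_range(series)
print(f"Next {period}: expect about {expected:.0f} new errors "
      f"(range {low:.0f} to {high:.0f})")
```

With this sample data the last month is clean, so the series rolls up to quarterly totals of 30, 21, 14 and 9, and the answer for the stakeholder becomes "about 2 next quarter, somewhere between 0 and 4."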

* Software Defect Removal by Robert Dunn (1984).