Jerry Yoakum's thoughts on software engineering and architecture from experience working with code, computer science, python, java, APIs, NASA, data mining, math, etc.

Thursday, April 26, 2018

Communicate with Customers / Users

"Never lose sight of why software is being developed: to satisfy real needs, to solve real problems. The only way to solve real needs is to communicate with those who have the needs. The customer or user is the most important person involved with your project."

It may feel like it is easier to develop in the sweet silence of a vacuum, but will the finished software be something that the customer likes or even finds useful? If your customer or product manager is not easily accessible, then designate some people on your team to be advocates for your customer. Ask them to imagine being the customer and get their feedback. If possible, have them use the software as if they were the customer and document the good and bad points.

Productivity and Quality are Inseparable

Productivity and quality have a clear relationship in software development.

- Demand for increased productivity will decrease quality (i.e. increase the number of bugs).

- Demand for increased quality (i.e. fewer bugs) will decrease productivity.

This is not a bad thing. Accept it and plan for it. Do not agree to deadlines that are unreasonable and will result in poor quality.

Quality is in the Eyes of the Beholder

Quality does not mean the same thing to all parties. A developer might think it is high performance code or an elegant design. A user might think it is a lot of features. A manager might think it is low development cost. These three examples could be described as speed, features, and cost. Optimizing one may come at the expense of another. Because of this, a project must decide on its priorities and articulate them to all parties.

Tuesday, April 17, 2018

Build Flexibility Into Software

A software component exhibits flexibility if it can be easily modified to perform its function (or a similar function) in a different situation. Flexible software components are more difficult to design than less flexible components. However, such components are more run-time efficient than general components and are more easily reused than less flexible components in diverse applications.

Labels:

architecture,

design,

Singapore,

software-engineering

Location:

Singapore

Monday, April 16, 2018

Build Generality Into Software

A software component exhibits generality if it can perform its intended functions without any change in a variety of situations. General software components are more difficult to design than less general components. They also usually run slower when executing. However, such components:

- Are ideal in complex systems where a similar function must be performed in a variety of places.

- Are potentially more reusable in other systems with no modification.

- Reduce maintenance costs for an organization due to reduced numbers of unique or similar components. Think about the hassle of maintaining multiple different repositories and build plans.

When decomposing a system into its subcomponents, stay cognizant of the potential for generality. Obviously, when a similar function is needed in multiple places, construct just one general function rather than multiple similar functions. Also, when constructing a function needed in just one place, build in generality where it makes sense - for future enhancements.
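To make the "one general function rather than multiple similar functions" advice concrete, here is a minimal Python sketch. The function name `total_by` and the order records are hypothetical, made up only for this illustration.

```python
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def total_by(
    items: Iterable[T],
    value_of: Callable[[T], float],
    include: Callable[[T], bool] = lambda _item: True,
) -> float:
    """Sum value_of(item) over the items accepted by include."""
    return sum(value_of(item) for item in items if include(item))


# Hypothetical order records, used only for this illustration.
orders = [
    {"customer": "acme", "amount": 120.0, "shipped": True},
    {"customer": "acme", "amount": 80.0, "shipped": False},
    {"customer": "globex", "amount": 50.0, "shipped": True},
]

# One general function stands in for several near-duplicates such as
# total_shipped() and total_for_customer().
shipped_total = total_by(orders, lambda o: o["amount"], lambda o: o["shipped"])
acme_total = total_by(orders, lambda o: o["amount"], lambda o: o["customer"] == "acme")
```

The cost shows up exactly where the post says it will: the general version takes a bit more thought to design, and the extra function calls make it slightly slower than a hand-written loop.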

Labels:

architecture,

design,

software-engineering

Location:

Springfield, MO, USA

Friday, April 06, 2018

Transition from Requirements to Design Is Not Easy

Requirements engineering culminates in a requirements specification, a detailed description of the external behavior of a system. The first step of design synthesizes an optimal software architecture. There is no reason why the transition from requirements to design should be any easier in software engineering than in any other engineering discipline. Design is hard. Converting from an external view to an internal optimal design is fundamentally a difficult problem.

Some methods claim the transition is easy by suggesting that we use the "architecture" of the requirements specification as the architecture. Since design is difficult, there are three possibilities:

- No thought went into selecting an optimal design during requirements analysis. In this case, you cannot afford to accept the design implied by the requirements specification as the design.

- Alternative designs were enumerated and analyzed and the best was selected, all during requirements analysis. Organizations cannot afford the effort to do a thorough design (typically 30 to 40 percent of total development costs) prior to baselining requirements, making a make/buy decision, and making a development cost estimate.

- The method assumes that some architecture is optimal for all applications. This is clearly not possible.

Labels:

design,

software-engineering

Location:

Springfield, MO, USA

Thursday, April 05, 2018

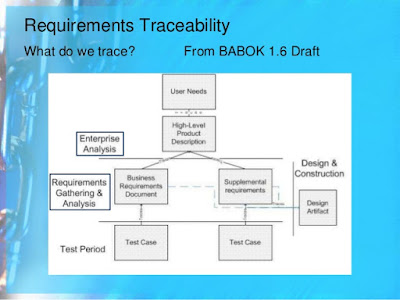

Trace Design to Requirements

All these needs can be satisfied by the creation of a table with rows corresponding to all completed software components and columns corresponding to every released requirement in the software requirements specification (SRS). A check in any position indicates that this design component helps to satisfy this requirement. Notice that a row void of checks indicates that a component has no purpose and a column void of checks indicates an unfulfilled requirement. Some people argue that this table is very difficult to maintain. I would argue that you need this table to design or maintain software. Without the table, you are likely to design a software component incorrectly, spending exorbitant amounts of time during maintenance. The successful creation of such a table depends on your ability to refer uniquely to every requirement.
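A minimal Python sketch of such a table follows. The component names and requirement IDs are hypothetical; the only assumption carried over from the text is that every requirement has a unique identifier.

```python
# Hypothetical requirement IDs and component names, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]

# Each row: a design component and the set of requirements it helps satisfy.
trace = {
    "LoginService": {"REQ-1"},
    "ReportBuilder": {"REQ-1", "REQ-2"},
    "LegacyExporter": set(),
}


def components_without_purpose(trace):
    """Rows void of checks: components that satisfy no requirement."""
    return sorted(name for name, reqs in trace.items() if not reqs)


def unfulfilled_requirements(trace, requirements):
    """Columns void of checks: requirements that no component satisfies."""
    covered = set().union(*trace.values())
    return [req for req in requirements if req not in covered]


def render(trace, requirements):
    """Return the table as text, with an X marking each check."""
    width = max(len(name) for name in trace)
    lines = [" " * width + "  " + "  ".join(requirements)]
    for name, reqs in trace.items():
        cells = [("X" if req in reqs else ".").center(len(req)) for req in requirements]
        lines.append(name.ljust(width) + "  " + "  ".join(cells))
    return "\n".join(lines)
```

With this sample data, `components_without_purpose(trace)` flags `LegacyExporter` and `unfulfilled_requirements(trace, requirements)` flags `REQ-3`, which are the two warning signs described above.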

----

STOP. Do not dismiss the above because it doesn't sound like an agile practice. There is nothing to stop you from creating, maintaining, and using the above table within the framework of scrum. This is really about design and documentation. Being able to document where work for specific requirements is to be, and was, done will drive development toward modular (in its many forms) design.

I have worked with development teams that track this... kinda. The specification for a project is stored in JIRA with each issue representing one requirement. When an issue is marked resolved, the issue is linked to the commit history, code review, and test documentation. It lacks a high-level view, but a sufficiently large table would suffer from the same difficulty. Anyway, it is immensely useful to be able to query JIRA for issues related to a specific feature and have a subset of commits to look at first.

Labels:

design,

software-engineering

Location:

Springfield, MO, USA

Evaluate Alternatives

A critical aspect of all engineering disciplines is the elaboration of multiple approaches, trade-off analyses among them, and the eventual adoption of one. After requirements are agreed upon, you must examine a variety of architectures and algorithms. You certainly do not want to use an architecture simply because it was used in the requirements specification. After all, that architecture was selected to optimize understandability of the system's external behavior. The architecture you want is the one that optimizes conformance with requirements.

For example, architectures are generally selected to optimize throughput, response time, modifiability, portability, interoperability, safety, or availability, while also satisfying the functional requirements. The best way to do this is to enumerate a variety of software architectures, analyze (or simulate) each with respect to the goals, and select the best alternative. Some design methods result in specific architectures; so one way to generate a variety of architectures is to use a variety of methods.
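One simple way to do the "analyze each with respect to the goals" step is a weighted decision matrix. This is my own choice of technique, not something the principle prescribes, and the goals, weights, and scores in this Python sketch are entirely hypothetical. Real numbers would come from your own analysis or simulation.

```python
# Hypothetical weights: how much each goal matters to this project (sums to 1).
weights = {"throughput": 0.5, "modifiability": 0.3, "availability": 0.2}

# Hypothetical scores from 0 to 10 for each candidate architecture.
candidates = {
    "monolith": {"throughput": 8, "modifiability": 4, "availability": 5},
    "microservices": {"throughput": 6, "modifiability": 8, "availability": 8},
    "event-driven": {"throughput": 7, "modifiability": 6, "availability": 7},
}


def weighted_score(scores, weights):
    """Combine per-goal scores into one number using the project's weights."""
    return sum(weights[goal] * scores[goal] for goal in weights)


def rank(candidates, weights):
    """Return candidate names ordered from best to worst weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


best = rank(candidates, weights)[0]
```

The ranking is only as good as the weights, so agree on the priorities with all parties before trusting the result.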

Labels:

architecture,

design,

software-engineering

Location:

Springfield, MO, USA

Wednesday, April 04, 2018

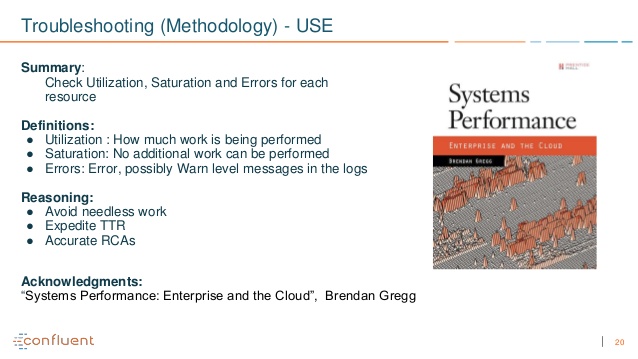

Performance Analysis: The USE Method

Blatant rip off of http://dtrace.org/blogs/brendan/2012/02/29/the-use-method/ with a small amount of simplification.

The USE method can be summarized as: For every resource, check utilization, saturation, and errors. While the USE method was first introduced to me as a method for examining hardware, some software resources can be examined with this methodology as well.

Utilization is the percentage of time that the resource is busy working during a specific time interval. While busy, the resource may still be able to accept more work; the degree to which it cannot do so is identified by saturation. That extra work is usually waiting in a queue.

Saturation happens when a resource is fully utilized and work is queued. When a resource is fully saturated, errors will occur.

Errors in terms of the USE method refer to the count of error events. Errors can degrade performance and might not be immediately noticed when the failure mode is recoverable. This includes operations that fail and are retried, as well as resources that fail in a pool of redundant resources.

The key metrics of the USE method are usually expressed as:

- Utilization as a percentage over a time interval.

- Saturation as a wait queue length.

- Errors as the number of errors reported.
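Here is a minimal Python sketch of turning raw observations into those three numbers. The `Sample` structure and its field names are my own assumptions for illustration; a real monitoring tool will expose its counters differently.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One observation of a resource (hypothetical structure)."""
    busy_seconds: float      # time the resource spent working in the interval
    interval_seconds: float  # length of the observation interval
    queue_length: int        # work items waiting when the sample was taken
    errors: int              # error events reported during the interval


def use_metrics(samples):
    """Summarize samples as utilization, saturation, and errors."""
    total_busy = sum(s.busy_seconds for s in samples)
    total_time = sum(s.interval_seconds for s in samples)
    return {
        # Utilization as a percentage over the whole time interval.
        "utilization_pct": 100.0 * total_busy / total_time,
        # Saturation as the average wait queue length.
        "saturation_avg_queue": sum(s.queue_length for s in samples) / len(samples),
        # Errors as the number of errors reported.
        "errors": sum(s.errors for s in samples),
    }
```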

The first step in the USE method is to create a list of resources. Try to be as complete as possible. Here is a generic list of hardware resources:

- CPUs - Sockets, cores, hardware threads (virtual CPUs).

- Main memory - RAM.

- Network interfaces - Ethernet ports.

- Storage devices - Disks.

- Controllers - Storage, network.

- Interconnects - CPU, memory, I/O.

Some software resources can be examined the same way:

- Mutex locks - Utilization may be defined as the time the lock was held, saturation by those threads queued waiting on the lock.

- Thread pools - Utilization may be defined as the time threads were busy processing work, saturation by the number of requests waiting to be serviced by the thread pool.

- Process/thread capacity - The current thread/process usage vs the maximum thread/process limit of a system may be defined as utilization; waiting on allocation may indicate saturation; and errors occur when the allocation fails.

- File descriptor capacity - Same as above but for file descriptors.
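The capacity-style resources in the list above can be modeled with a toy tracker. This Python sketch is purely illustrative: the `CapacityTracker` class is hypothetical and does not read real operating system limits. It only shows how usage versus limit maps to utilization, waiting maps to saturation, and failed allocation maps to errors.

```python
class CapacityTracker:
    """Toy model of a limited resource such as a file descriptor table."""

    def __init__(self, limit, max_waiting):
        self.limit = limit              # maximum units that can be in use
        self.max_waiting = max_waiting  # how many requests may wait in line
        self.in_use = 0
        self.waiting = 0
        self.errors = 0

    def request(self):
        """Ask for one unit; returns 'granted', 'queued', or 'failed'."""
        if self.in_use < self.limit:
            self.in_use += 1
            return "granted"
        if self.waiting < self.max_waiting:
            self.waiting += 1
            return "queued"
        self.errors += 1
        return "failed"

    def release(self):
        """Free one unit, handing it straight to a waiter if there is one."""
        if self.waiting > 0:
            self.waiting -= 1  # the waiter takes the unit, so in_use is unchanged
        elif self.in_use > 0:
            self.in_use -= 1

    def use(self):
        """Report the three USE metrics for this resource."""
        return {
            "utilization_pct": 100.0 * self.in_use / self.limit,
            "saturation": self.waiting,
            "errors": self.errors,
        }
```

With a limit of 2 and room for 1 waiter, four requests in a row come back as granted, granted, queued, and failed.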

Here are some general suggestions for interpreting metric types:

- Utilization - 100% utilization is usually a sign of a bottleneck (check saturation and its effect to confirm). High utilization (i.e. >60%) can begin to be a problem. When utilization is measured over a relatively long time period, an average utilization of 60% can hide short bursts of 100% utilization.

- Saturation - Any amount of saturation can be a problem. This may be measured as the length of a wait queue or time spent waiting on the queue.

- Errors - Non-zero error counters are worth investigating, especially if they are still increasing while performance is poor.
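These suggestions can be sketched as a small Python check. The function name, the messages, and the sample data are hypothetical; the 60% threshold is the rule of thumb from the list above, not a hard limit.

```python
HIGH_UTILIZATION_PCT = 60  # rule-of-thumb threshold from the suggestions above


def interpret(utilization_samples, max_queue_length, error_count):
    """Return a list of findings for one resource."""
    findings = []
    average = sum(utilization_samples) / len(utilization_samples)
    if max(utilization_samples) >= 100:
        findings.append("utilization hit 100%: possible bottleneck, check saturation")
    if average > HIGH_UTILIZATION_PCT:
        findings.append("average utilization is high")
    if max_queue_length > 0:
        findings.append("saturation: work is queued")
    if error_count > 0:
        findings.append("errors reported: worth investigating")
    return findings


# Hypothetical per-second utilization: three seconds pinned at 100%,
# yet the ten-second average is only 60%.
per_second = [100, 100, 100, 40, 40, 40, 40, 40, 40, 60]
average = sum(per_second) / len(per_second)
```

Looking only at `average` would hide the burst, which is why the check also looks at the peak of the individual samples.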

Design Without Documentation Is Not Design

Sometimes you'll hear a software engineer say, "I have finished the design. All that's left is its documentation." This makes no sense. Can you imagine a building architect saying, "I have completed the design of your new home. All that's left is to draw a picture of it," or a novelist saying, "I have completed the novel. All that's left is to write it"? Design is the selection, abstraction, and recording of an appropriate architecture and algorithm onto paper or other medium.

Labels:

architecture,

design,

software-engineering

Location:

Springfield, MO, USA
